Making Sense of the Modern AI Ecosystem: Tools, Terminologies, and How They Interconnect

Artificial intelligence has entered a new era of rapid expansion, driven by advances in large language models (LLMs), generative AI, and automation frameworks. This progress has produced an overwhelming array of tools and terms: AI models, AI agents, retrieval-augmented generation (RAG), the Model Context Protocol (MCP), webhooks, workflow automation, and vector databases, to name a few. For many organizations, this landscape appears fragmented, full of overlapping capabilities and constant change.


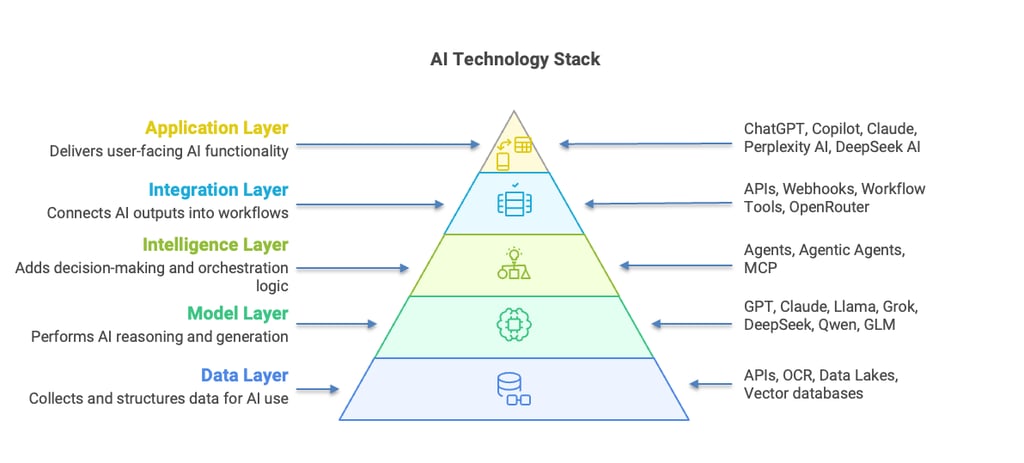

This paper provides a structured way to understand the AI ecosystem as an interconnected architecture. By viewing AI through a five-layer technology backbone (Data, Model, Intelligence, Integration, and Application), we can see how each component serves a specific role and how the components work together to transform information into intelligent action. The paper concludes with practical guidance on how to navigate this complexity, adopt AI responsibly, and integrate it strategically for meaningful outcomes.

1. The AI Technology Backbone

Every AI system, no matter how advanced, can be understood through five foundational layers. At the bottom lies the Data Layer, which gathers and prepares the information that fuels every intelligent process. This layer includes tools for data ingestion, optical character recognition (OCR), and APIs. It also includes embedding models, which convert unstructured content into numerical vectors, and vector databases, which store and index those vectors so that AI models can retrieve relevant content by meaning.

Above this sits the Model Layer, where machine learning and deep learning models perform reasoning, prediction, or content generation. This layer includes large language models such as GPT, Claude, and Gemini; computer vision models such as CLIP and SAM; speech-recognition models such as Whisper; and text-to-speech engines such as ElevenLabs.

The third layer, the Intelligence Layer, orchestrates AI logic. Here, frameworks such as LangChain and LangGraph, along with the Model Context Protocol (MCP), help models connect to external data sources, reason over multiple steps, and execute complex workflows through agentic behavior. AI agents themselves operate within this layer, using reasoning and memory to break tasks into smaller goals and act autonomously.

The Integration Layer connects these intelligent components with the real world. Through APIs, webhooks, workflow tools like n8n or Zapier, and unified routing platforms such as OpenRouter, AI becomes embedded into enterprise systems and operational workflows.
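A unified routing gateway lets one request format reach many models. The minimal sketch below builds (but does not send) such a request in Python. It assumes OpenRouter's OpenAI-compatible endpoint at `https://openrouter.ai/api/v1/chat/completions` with bearer-token authentication. The model identifiers and the placeholder key are illustrative assumptions, so check the provider's documentation before relying on them.

```python
import json
import urllib.request

# Assumed OpenRouter endpoint (OpenAI-compatible chat completions format).
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build, but do not send, a chat request routed to `model` through one gateway."""
    body = {
        "model": model,  # provider/model naming here is an illustrative assumption
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Switching models changes only one string; the integration code stays the same.
for model_id in ("openai/gpt-4o", "anthropic/claude-3.5-sonnet"):
    req = build_chat_request(model_id, "Summarize this invoice.", "OPENROUTER_API_KEY")
    print(req.get_method(), req.full_url, json.loads(req.data)["model"])
```

To send a request, pass it to `urllib.request.urlopen` with a real API key. In this design, swapping models changes a single string, which keeps the integration code vendor-neutral.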

Finally, the Application Layer is where AI meets the end-user. This layer includes chatbots, RAG-powered assistants, and generative AI applications that produce images, voices, or videos. Together, these five layers form a coherent ecosystem—from raw data to user-facing intelligence.

2. Understanding the Components and How They Interrelate

At the heart of the ecosystem are AI models—the core engines of intelligence. These models process language, interpret images, or generate media. Their capabilities, however, depend on the quality and accessibility of data. Without structured and accurate inputs, even the most advanced model can produce unreliable results.

To extend the usefulness of these models, the Model Context Protocol (MCP), an open standard, gives them a consistent way to interact with external tools and databases. MCP acts like a universal connector. Any MCP-compatible client can use a tool or data source exposed through an MCP server, which lets LLMs access structured data, trigger actions, or fetch information dynamically.
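MCP messages are JSON-RPC 2.0 requests. As a rough sketch of what an MCP client sends, the code below serializes a `tools/list` discovery request and a `tools/call` invocation. The tool name `lookup_customer` and its arguments are hypothetical. The transport (for example, standard input/output or HTTP) and the session initialization step are omitted.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

_request_ids = count(1)  # each JSON-RPC request carries a unique id


def mcp_request(method: str, params: Optional[Dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request of the kind an MCP client sends to a server."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# 1. Ask the server which tools it exposes.
list_req = mcp_request("tools/list")

# 2. Invoke one of them. "lookup_customer" and its arguments are hypothetical.
call_req = mcp_request(
    "tools/call",
    {"name": "lookup_customer", "arguments": {"customer_id": "C-1042"}},
)

print(list_req)
print(call_req)
```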

Within the Intelligence Layer, AI agents represent the next step. Unlike a model that answers a single query and stops, an agent can plan, reason, and take actions across multiple steps. Agents use LLMs for reasoning and frameworks such as LangChain or LangGraph for tool orchestration. Multi-agent frameworks such as CrewAI and AutoGen go further, coordinating several agents that each specialize in a different part of a larger task.
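The plan, act, observe cycle of an agent can be reduced to a short loop. In this minimal sketch, `scripted_planner` is a hypothetical stand-in for an LLM call that picks the next tool. The two entries in `TOOLS` are hypothetical placeholders for real APIs. Frameworks such as LangGraph add state management, retries, and branching on top of this basic pattern.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical tools; real ones would call APIs, databases, or other models.
TOOLS: Dict[str, Callable[[str], str]] = {
    "search_docs": lambda q: f"3 policy documents mention '{q}'",
    "summarize": lambda text: f"Summary: {text[:40]}",
}


def scripted_planner(goal: str, history: List[Tuple[str, str]]) -> Optional[Tuple[str, str]]:
    """Stand-in for an LLM: return the next (tool, input) pair, or None when done."""
    if not history:
        return ("search_docs", goal)
    if len(history) == 1:
        return ("summarize", history[-1][1])
    return None  # the planner judges the goal complete


def run_agent(goal: str, max_steps: int = 5) -> List[Tuple[str, str]]:
    history: List[Tuple[str, str]] = []  # serves as the agent's short-term memory
    for _ in range(max_steps):  # cap the steps so a bad plan cannot loop forever
        step = scripted_planner(goal, history)
        if step is None:
            break
        tool, tool_input = step
        observation = TOOLS[tool](tool_input)  # act, then record what happened
        history.append((tool, observation))
    return history


for tool_name, obs in run_agent("remote work"):
    print(f"{tool_name}: {obs}")
```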

Retrieval-Augmented Generation (RAG) plays a crucial role in keeping AI outputs factual and contextually relevant. It combines semantic search with generation. The system first retrieves relevant information from a vector database, then supplies that information to the model as context before the model formulates a response. Grounding answers in retrieved source material reduces hallucination and misinformation but does not eliminate them, so the quality of the underlying documents still matters.
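The retrieve-then-generate flow of RAG can be sketched as follows. To keep the example self-contained, it uses a toy word-count embedding and a Python list in place of a real embedding model and vector database. It stops after building the augmented prompt and leaves out the final LLM call. `DOCS`, `embed`, and `retrieve` are illustrative names, not the API of any specific library.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

DOCS = [  # hypothetical knowledge base
    "Refunds are processed within 14 days of a return request.",
    "Our office is closed on public holidays.",
    "Premium customers receive free shipping on all orders.",
]


def embed(text: str) -> Counter:
    """Toy embedding (word counts); a real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Stand-in for a vector database: (vector, original text) pairs.
INDEX: List[Tuple[Counter, str]] = [(embed(d), d) for d in DOCS]


def retrieve(query: str, k: int = 1) -> List[str]:
    """Return the k stored documents most similar to the query."""
    q = embed(query)
    ranked = sorted(INDEX, key=lambda item: cosine(q, item[0]), reverse=True)
    return [doc for _, doc in ranked[:k]]


def build_prompt(query: str) -> str:
    """Augment the user's question with retrieved context before it reaches the LLM."""
    context = "\n".join(f"- {c}" for c in retrieve(query))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


print(build_prompt("How long do refunds take?"))
```

Telling the model to answer only from the supplied context is one common way to reduce unsupported answers, though it does not eliminate them.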

Meanwhile, generative AI has captured the public imagination by creating new forms of content from natural language prompts. Text-to-image platforms such as Midjourney and DALL·E, text-to-speech systems such as Play.ht, and text-to-video tools such as Runway and Sora demonstrate how models can transform creative industries. These applications operate at the top of the stack, where AI delivers visible, tangible value to users.

To make AI practical and repeatable, workflow automation tools such as Zapier, n8n, or Make connect models, agents, and business systems. APIs let one system request data or actions from another. Webhooks push event notifications to a registered URL as soon as something happens, so components can react without polling. Gateways such as OpenRouter provide unified access to models from multiple providers through a single API. This integration layer is what turns AI prototypes into real operational solutions.
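A webhook endpoint is a publicly reachable URL. For that reason, receivers typically verify that each event really came from the expected sender before triggering any automation. The sketch below shows one common approach: an HMAC-SHA256 signature over the request body, compared in constant time. The secret, the `sha256=` prefix, and the event fields are assumptions modeled on conventions that some providers use. Header names and signing schemes vary by service.

```python
import hashlib
import hmac
import json

SECRET = b"shared-webhook-secret"  # hypothetical secret agreed between sender and receiver


def sign(body: bytes, secret: bytes = SECRET) -> str:
    """Computed by the sending system and attached as a header before it POSTs."""
    return "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()


def handle_webhook(body: bytes, signature_header: str) -> dict:
    """Receiver side: reject tampered or unsigned payloads, then dispatch the event."""
    expected = sign(body)
    if not hmac.compare_digest(expected, signature_header):  # constant-time comparison
        raise PermissionError("invalid webhook signature")
    event = json.loads(body)
    # A real handler would hand the event to the next workflow step here.
    return {"status": "accepted", "event": event.get("type")}


payload = json.dumps({"type": "invoice.created", "id": "inv_001"}).encode()
print(handle_webhook(payload, sign(payload)))
```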

Finally, beneath all these layers lies a vital but often invisible foundation: data representations. Embeddings (numerical vectors that capture the meaning of content) and vector databases allow text, audio, or images to be stored and searched by meaning, not just by keywords. Modular “blocks” or nodes in visual AI platforms (such as Flowise or Dust) let developers design data flows intuitively, linking components like Lego pieces to form complex systems.
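The following sketch shows what "searched by meaning" looks like mechanically. It is a minimal in-memory stand-in for a vector database that ranks stored vectors by cosine similarity to a query vector. The three-dimensional vectors are hand-made assumptions that stand in for the output of a real embedding model, which typically produces hundreds or thousands of dimensions. The class is illustrative and does not reflect any product's API.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


class InMemoryVectorStore:
    """Minimal stand-in for a vector database: stores vectors, ranks by cosine similarity."""

    def __init__(self) -> None:
        self._items: Dict[str, Tuple[Vector, str]] = {}

    def add(self, item_id: str, vector: Vector, text: str) -> None:
        self._items[item_id] = (vector, text)

    @staticmethod
    def _cosine(a: Vector, b: Vector) -> float:
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def search(self, query: Vector, k: int = 2) -> List[Tuple[str, float]]:
        """Return the ids and scores of the k stored vectors closest to the query."""
        scored = [(i, self._cosine(query, v)) for i, (v, _) in self._items.items()]
        return sorted(scored, key=lambda s: s[1], reverse=True)[:k]


# Hand-made vectors standing in for real embeddings.
store = InMemoryVectorStore()
store.add("doc-car", [0.9, 0.1, 0.0], "How to service your car")
store.add("doc-auto", [0.85, 0.15, 0.05], "Automobile maintenance schedule")
store.add("doc-cake", [0.0, 0.2, 0.95], "Chocolate cake recipe")

# A query such as "vehicle upkeep" would embed near the first two documents,
# even though it shares no keywords with them.
print(store.search([0.88, 0.12, 0.02], k=2))
```

Production vector databases use approximate nearest-neighbor indexes so that search stays fast over millions of vectors, but the ranking idea is the same.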

3. The AI Ecosystem Flow

In practice, an AI system operates as a continuous pipeline. Data from documents, sensors, or APIs is first captured and structured through OCR and embedding models. These embeddings are stored in vector databases, which allow semantic search and retrieval. When a user interacts with the system, an LLM or vision model interprets the query, supported by retrieved knowledge from the database. Agents then orchestrate reasoning, perform actions, or connect to external systems through workflow automation and APIs. The results—whether a report, summary, visual, or insight—are finally delivered through a user-facing application such as a chatbot, dashboard, or media generator.
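The pipeline described above can be expressed as an ordered list of stages, each of which enriches a shared context. In this simplified sketch, sentence splitting stands in for ingestion and OCR, and keyword overlap stands in for embedding-based retrieval. `create_ticket` is a hypothetical action string, not a real API call. The sketch illustrates only the shape of the flow from captured data to a delivered result.

```python
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]
Stage = Callable[[Context], Context]


def capture(ctx: Context) -> Context:
    # Data layer: stand-in for ingestion/OCR; the input is already plain text here.
    ctx["chunks"] = [s.strip() for s in ctx["raw"].split(".") if s.strip()]
    return ctx


def retrieve(ctx: Context) -> Context:
    # Stand-in for embedding + vector search: naive keyword overlap with the query.
    terms = set(ctx["query"].lower().split())
    ctx["evidence"] = [c for c in ctx["chunks"] if terms & set(c.lower().split())]
    return ctx


def act(ctx: Context) -> Context:
    # Intelligence/integration layers: an agent would call a model or an API here.
    ctx["action"] = f"create_ticket({len(ctx['evidence'])} findings)"  # hypothetical action
    return ctx


def deliver(ctx: Context) -> Context:
    # Application layer: format the result for the end user.
    ctx["output"] = "; ".join(ctx["evidence"]) + f" -> {ctx['action']}"
    return ctx


PIPELINE: List[Stage] = [capture, retrieve, act, deliver]


def run(raw: str, query: str) -> Context:
    ctx: Context = {"raw": raw, "query": query}
    for stage in PIPELINE:  # each stage reads and extends the shared context
        ctx = stage(ctx)
    return ctx


result = run(
    "Pump 3 reported overheating. Shift ended normally. Pump 3 vibration rose.",
    "pump overheating",
)
print(result["output"])
```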

This data-to-action pipeline defines the rhythm of modern AI systems. Information flows upward through the stack, is processed by models and agents, and emerges at the application layer as actionable results, which often feed back into operational systems.

4. The Challenge of Complexity and Overlap

The current AI landscape is both vibrant and confusing. Many tools offer similar functions with minor variations, while new frameworks appear almost weekly. This constant evolution can make it difficult to decide where to invest time or resources. Furthermore, most AI systems are still in the early stages of maturity, with varying degrees of reliability, scalability, and interoperability.

This complexity mirrors the early days of cloud computing, when hundreds of competing platforms eventually converged around open standards and dominant architectures. The same pattern is emerging in AI. The key for organizations is not to chase every trend, but to build with clarity—anchoring decisions on stable concepts such as data governance, model flexibility, and modular integration.

5. Practical Guidance for AI Adoption

To navigate this landscape, leaders should focus on building AI systems that are modular, integrated, and grounded in real business value. The first principle is to think in components, not platforms. Avoid committing to single-vendor ecosystems; instead, choose flexible building blocks that can connect through APIs or MCP-compatible interfaces.

Second, data quality remains the cornerstone of effective AI. Models are only as accurate as the data they consume. Invest in data structuring, governance, and provenance before layering advanced intelligence on top.

Third, adopt a layered approach to development. Build sequentially—start by securing the data foundation, then integrate models, add intelligence via agents, and finally embed automation and applications. This structured progression ensures stability and scalability.

Fourth, begin small but scale strategically. Identify one or two high-impact use cases, such as document summarization, knowledge retrieval, or workflow automation. Once value is demonstrated, extend horizontally across departments or functions.

Fifth, integrate AI into workflows, not just interfaces. The greatest value arises when AI directly supports or automates decision processes, rather than existing as a separate tool.

Finally, monitor the maturity and consolidation of tools. The market will evolve rapidly; prioritize solutions with open standards, strong developer ecosystems, and proven enterprise use cases.

6. Conclusion

The current AI revolution is as much about architecture as it is about intelligence. Beneath the surface of flashy generative tools lies a structured ecosystem of data pipelines, models, orchestration frameworks, and integrations that together enable machines to reason, create, and act.

Understanding this ecosystem through the five-layer framework—Data, Model, Intelligence, Integration, and Application—provides a foundation for clarity. It transforms AI from a maze of acronyms into a structured, navigable landscape.

In an environment of rapid innovation and inevitable consolidation, success will depend not on adopting every new tool but on building intelligently—grounded in good data, flexible architecture, and practical use cases. The goal is not to chase trends, but to build lasting capability: to turn the complexity of modern AI into a disciplined, purposeful advantage for your organization.

© OpenSME Pte Ltd.